Fixed-point number representation and its arithmetic form one of the important skill sets of any DSP engineer. Today I'm going to talk briefly about what fixed-point representation is and how to use it.

Introduction

Basically, arithmetic and other numerical computations are carried out by the CPU, specifically by its ALU (Arithmetic Logic Unit). However, arithmetic on floating-point numbers (numbers with a fractional part, written with a decimal point) calls for more specialized hardware called the FPU (Floating Point Unit), which acts as a kind of co-processor for floating-point computations. In the classic co-processor arrangement, the CPU hands each floating-point operation over to the FPU and receives the result back. On a processor without an FPU, such an instruction may instead trap into a software emulation routine.

Almost all desktops (PCs) have built-in FPUs to support these kinds of operations. In embedded systems and many DSPs, however, they are much less common because of factors such as cost, size and power consumption.

So how do we handle floating-point arithmetic? In most cases the chipset manufacturers (ARM, TI, etc.) provide routines for it: software implementations that emulate floating-point operations. Still, this is often not sufficient, because the slow speed of such emulation can affect overall system performance. This is where fixed-point numbers come in handy.

Before getting into fixed-point numbers, recall that computers generally represent numbers, especially integers, in 2's complement format. A positive number is represented by its plain binary value, and a negative number by the 2's complement of its magnitude: invert every bit (the 1's complement) and then add one.

The table below shows some numbers and their 2's complement representation.
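
To experiment with the 2's complement rule yourself, here is a minimal C sketch. The function names `twos_complement8` and `bits8` are illustrative and not from the original. The first negates an 8-bit pattern by inverting its bits and adding one; the second writes a value's 8-bit pattern out as text.

```c
#include <stdint.h>

/* Negate an 8-bit two's complement pattern: invert every bit
   (the 1's complement) and add one. The cast keeps only the low 8 bits. */
uint8_t twos_complement8(uint8_t bits)
{
    return (uint8_t)(~bits + 1u);
}

/* Write the 8-bit two's complement pattern of v into out as '0'/'1'
   characters, most significant bit first; out must hold 9 bytes. */
void bits8(int8_t v, char out[9])
{
    uint8_t u = (uint8_t)v;   /* conversion to unsigned yields the raw pattern */
    for (int i = 7; i >= 0; --i)
        out[7 - i] = ((u >> i) & 1u) ? '1' : '0';
    out[8] = '\0';
}
```

For instance, `bits8(5, s)` produces "00000101", `bits8(-5, s)` produces "11111011", and `twos_complement8(0x05)` returns 0xFB. Note that 0x80 (-128) maps to itself, because +128 does not fit in 8 bits.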

That covers integers. The representation is quite different for floating-point numbers: on most modern computers they are represented using the IEEE 754 standard [1]. In fixed-point representation, however, we use the same 2's complement approach to represent numbers that have a fractional part.

Welcome 'On-board'

Now let's dive into fixed-point representation. A real number can be divided into two parts: a magnitude (integer) part and a fractional part. In fixed-point representation we allocate a fixed number of bits to each part, using enough bits (especially for the fractional part) to keep the quantization error small.
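
To make the split concrete, here is a minimal C sketch. It assumes a hypothetical 32-bit layout with 16 bits for the magnitude (integer) part, sign included, and 16 bits for the fractional part. The names `q16_from_double`, `q16_int_part` and `q16_frac_part` are illustrative. The stored integer is the value multiplied by 2^16, so each part can be pulled out with a shift or a mask.

```c
#include <stdint.h>

/* Hypothetical layout chosen for illustration: 16 integer bits
   (sign included) and 16 fractional bits in one int32_t. */
#define Q16_FRAC_BITS 16
#define Q16_ONE (1L << Q16_FRAC_BITS)   /* stored value representing 1.0 */

/* Store x as x * 2^16, truncated toward zero (rounding is left out here). */
int32_t q16_from_double(double x)
{
    return (int32_t)(x * Q16_ONE);
}

/* Magnitude (integer) part: the bits above the binary point.
   Assumes >> is an arithmetic shift for negative values (true on common
   compilers, implementation-defined in C), which rounds toward minus infinity. */
int32_t q16_int_part(int32_t raw)
{
    return raw >> Q16_FRAC_BITS;
}

/* Fractional part as a count of 2^-16 steps: the low 16 bits. */
uint32_t q16_frac_part(int32_t raw)
{
    return (uint32_t)raw & (uint32_t)(Q16_ONE - 1);
}
```

With this layout, 3.25 is stored as 212992, which splits into an integer part of 3 and a fractional part of 16384 (0.25 × 2^16). A negative value such as -0.75 splits into -1 and 0.25, since -1 + 0.25 = -0.75.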

For example, let's take 3.0, divide it by 2 repeatedly, and analyze the binary values.

As you can see, each time we divide the number by 2, the binary point moves one place to the left while the bit pattern itself stays the same (for example, 3.0 = 11.0 and 1.5 = 1.1 in binary). Multiplying by 2 moves it one place to the right. So multiplying or dividing by 2 shifts the point in binary, just as multiplying or dividing by 10 shifts the decimal point in decimal.
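
This shifting behaviour can be checked with a small C sketch. Purely for illustration, it assumes values are stored as integers scaled by 2^4 (4 fractional bits), so 3.0 is stored as 48. Dividing by 2 is then a one-bit right shift of the stored integer. The helper `to_binary_point` is a hypothetical name; it prints the bits with an explicit binary point.

```c
#include <stdint.h>

#define FRAC_BITS 4   /* illustrative: 4 bits after the binary point */

/* Render a non-negative value stored with FRAC_BITS fractional bits as
   binary text with an explicit point, e.g. 48 (3.0) -> "11.0000".
   Leading zeros of the integer part are dropped, keeping at least one
   digit. out must hold at least 18 bytes. */
void to_binary_point(uint16_t raw, char *out)
{
    int pos = 0;
    int top = 15;
    while (top > FRAC_BITS && !((raw >> top) & 1u))
        --top;                           /* skip leading zero integer bits */
    for (int i = top; i >= 0; --i) {
        out[pos++] = ((raw >> i) & 1u) ? '1' : '0';
        if (i == FRAC_BITS)              /* bit FRAC_BITS has weight 2^0 */
            out[pos++] = '.';
    }
    out[pos] = '\0';
}
```

Starting from 48 and shifting right one bit at a time produces "11.0000" (3.0), "1.1000" (1.5), "0.1100" (0.75) and "0.0110" (0.375). The same "11" pattern appears each time, with the binary point one place further to its left.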

Now let's talk about the 'Q' format, a notation that describes how the bits of a fixed-point word are split. In Qf notation, f gives the number of bits used to represent the fractional part. For example, Q15 means that 15 bits are used for the fractional part (out of a 16-bit, 32-bit or larger word length). Here word length means the register size (8, 16, 32, 64 bits, etc.). The Qm.f notation gives both the magnitude and the fractional parts: for example, Q1.15 means 1 magnitude bit and 15 fractional bits. The m field can also be used to account for the sign bit. Under that convention, Q1.15 is a 16-bit signed word whose single magnitude bit is the sign bit; texts that exclude the sign bit from m write the same format as Q0.15.
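
The Qm.f parameters determine both the resolution and the range of a format. Here is a minimal C sketch that computes them, assuming a signed 2's complement word whose bit count includes the sign bit. The `QInfo` struct and the `q_info` function are illustrative names.

```c
/* Resolution and range of a signed fixed-point format stored in
   word_bits bits (sign bit included) with frac_bits fractional bits. */
typedef struct {
    double resolution;  /* value of one LSB: 2^-frac_bits */
    double min;         /* most negative value: -2^(word_bits-1-frac_bits) */
    double max;         /* most positive value: 2^(word_bits-1-frac_bits) - LSB */
} QInfo;

/* Requires 0 <= frac_bits < word_bits <= 63. */
QInfo q_info(int word_bits, int frac_bits)
{
    QInfo q;
    double int_span = (double)(1LL << (word_bits - 1 - frac_bits));
    q.resolution = 1.0 / (double)(1LL << frac_bits);
    q.min = -int_span;
    q.max = int_span - q.resolution;
    return q;
}
```

For example, `q_info(16, 15)` describes Q1.15 (Q15 in a 16-bit word). It gives a resolution of 2^-15 (about 0.0000305) and a range from -1.0 up to 1 - 2^-15. `q_info(32, 16)` describes a 32-bit format with 16 fractional bits, covering -32768 up to just under +32768.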

The number of bits required for the fractional part depends on the resolution (precision) one wants to achieve. For example, with one bit we get a resolution of 0.5 (1/2^1), with 2 bits a resolution of 0.25 (1/2^2), and so on. Matching the 23-bit fraction field of IEEE 754 single precision would take at least 23 fractional bits. Choose the number of fractional bits carefully so that the number is represented faithfully, with minimal rounding error. To convert a fractional number to Qx format (x fractional bits), multiply it by 2^x and round to the nearest integer. For example, 0.142 in Q15 is 0.142 × 2^15 = 4653.06, which rounds to 4653, or 1001000101101 in binary.
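
This conversion can be sketched in C for Q15 stored in an `int16_t`. The rounding (halves away from zero) and the saturation to [-1.0, 1.0 - 2^-15] are choices made for this sketch. The names `float_to_q15` and `q15_to_float` are illustrative.

```c
#include <stdint.h>

#define Q15_SCALE 32768.0   /* 2^15 */

/* Convert a real number to Q15: multiply by 2^15, round to the nearest
   integer (halves away from zero) and saturate to the int16_t range. */
int16_t float_to_q15(double x)
{
    double scaled = x * Q15_SCALE;
    scaled += (scaled >= 0.0) ? 0.5 : -0.5;
    if (scaled >= INT16_MAX)
        return INT16_MAX;
    if (scaled <= INT16_MIN)
        return INT16_MIN;
    return (int16_t)scaled;   /* the cast truncates toward zero, completing the rounding */
}

/* Convert back: divide the stored integer by 2^15. */
double q15_to_float(int16_t q)
{
    return q / Q15_SCALE;
}
```

With these helpers, `float_to_q15(0.142)` returns 4653, and `q15_to_float(4653)` gives back about 0.1419983. The error is well within one LSB (2^-15). Inputs of 1.0 or more saturate to 32767, because +1.0 itself is not representable in Q15.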

Now let's see how mathematical operations affect the Q format. When two numbers in the same Q format are added or subtracted, the result keeps the same number of fractional bits, but it may need one extra integer bit (Qm.f + Qm.f = Q(m+1).f). Addition and subtraction should only be done on numbers in the same Q format, so shift one operand first if their formats differ. Also take care of the overflow that can occur during addition when that extra integer bit does not fit in the word.
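
Here is a minimal C sketch of Q15 addition and subtraction. The helper names are illustrative. The exact result can need one more integer bit than Q15 offers, so it is formed in 32 bits and then saturated. Saturation is one common way to handle the overflow in DSP code, because plain wrap-around would turn a large positive result into a large negative one.

```c
#include <stdint.h>

/* Clamp a 32-bit intermediate back into the Q15 (int16_t) range. */
static int16_t q15_saturate(int32_t x)
{
    if (x > INT16_MAX) return INT16_MAX;
    if (x < INT16_MIN) return INT16_MIN;
    return (int16_t)x;
}

/* Both operands must already be in Q15. The result is still Q15 but may
   need 17 bits, so it is computed in 32 bits before saturating. */
int16_t q15_add(int16_t a, int16_t b)
{
    return q15_saturate((int32_t)a + b);
}

int16_t q15_sub(int16_t a, int16_t b)
{
    return q15_saturate((int32_t)a - b);
}
```

For example, 0.5 + 0.25 (16384 + 8192) gives 24576, which is 0.75. By contrast, 0.75 + 0.75 would need 1.5, which does not fit, so the result saturates at 32767 (just under 1.0).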

When a Qm number is multiplied by a Qn number, the result is in Q(m+n) format; for example, multiplying two 16-bit Q15 numbers gives a 32-bit Q30 product. For division, the result is in Q(m-n) format. As a last note, it is the developer's responsibility to keep track of the changes in Q format throughout the code.
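
In this rule, m and n count fractional bits. Here is a minimal C sketch for Q15 operands, with illustrative helper names. The 32-bit product is Q30, so it is rounded and shifted right by 15 to return to Q15. For division, the dividend is first widened to Q30, so that Q30 / Q15 yields Q15 rather than Q0. The sketch assumes that a right shift of a negative value is arithmetic, as it is on common compilers, although C leaves this implementation-defined.

```c
#include <stdint.h>

/* Clamp a 32-bit intermediate back into the Q15 (int16_t) range. */
static int16_t q15_saturate(int32_t x)
{
    if (x > INT16_MAX) return INT16_MAX;
    if (x < INT16_MIN) return INT16_MIN;
    return (int16_t)x;
}

/* Q15 * Q15 = Q30: add half an LSB of the result, then drop 15 bits.
   Only (-1.0) * (-1.0) exceeds the Q15 range and is saturated. */
int16_t q15_mul(int16_t a, int16_t b)
{
    int32_t prod = (int32_t)a * b;          /* Q30 */
    prod += (int32_t)1 << 14;               /* round to nearest */
    return q15_saturate(prod >> 15);        /* Q30 -> Q15 */
}

/* Q30 / Q15 = Q15. b must be nonzero. The quotient is saturated if it
   falls outside the Q15 range, which can only happen when |a| >= |b|. */
int16_t q15_div(int16_t a, int16_t b)
{
    int32_t num = (int32_t)a * 32768;       /* Q15 -> Q30, exact */
    return q15_saturate(num / b);           /* C division truncates toward zero */
}
```

For example, `q15_mul(16384, 16384)`, i.e. 0.5 × 0.5, returns 8192 (0.25). Likewise, `q15_div(8192, 16384)`, i.e. 0.25 / 0.5, returns 16384 (0.5).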

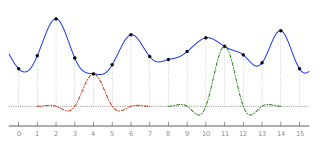

Next time we shall take an example filter and implement it first in floating point and then in fixed point, to clearly understand the representation.

Till then keep Hac-King...

Also check this link for further research.